This SuperDataScience episode hosted by our Chief Data Scientist, Jon Krohn, is all about a rumored new model out of OpenAI called Q* (pronounced “Q star”) that has been causing quite a stir, both for its purported role in Altmangate and its implications for Artificial General Intelligence (AGI).

Key context:

• Q* is reported to have advanced capabilities in solving complex math problems expressed in natural language, indicating a significant leap in A.I.

• The rumors about Q* emerged during OpenAI’s corporate drama involving the firing and re-hiring of CEO Sam Altman.

• Reports suggested a connection between Q*’s development and the OpenAI upheaval, with staff expressing concerns about its potential dangers to humanity (no definitive evidence links Q* to the OpenAI CEO controversy, however, leaving its role in the incident ambiguous).

Research overview:

• OpenAI’s recent published research on solving grade-school word-based math problems (e.g., “The cafeteria had 23 apples. They used 20 for lunch and bought 6 more. How many apples do they have?”) hints at broader implications of step-by-step reasoning in A.I.

• While today’s Large Language Models (LLMs) show better results on logical problems when we use chain-of-thought prompting (“work through the problem step by step”), the contemporary LLMs do so linearly (they don’t go back to correct themselves or explore alternative intermediate steps), which limits their capability.

• To develop a model that can be trained and evaluated at each intermediate step, OpenAI gathered tons of human feedback on math-word problems, amassing a dataset of 800,000 individual intermediate steps across 75,000 problems.

• Their approach involves an LLM generating solutions at each step and a second model acting as a verifier.

The Q* connection:

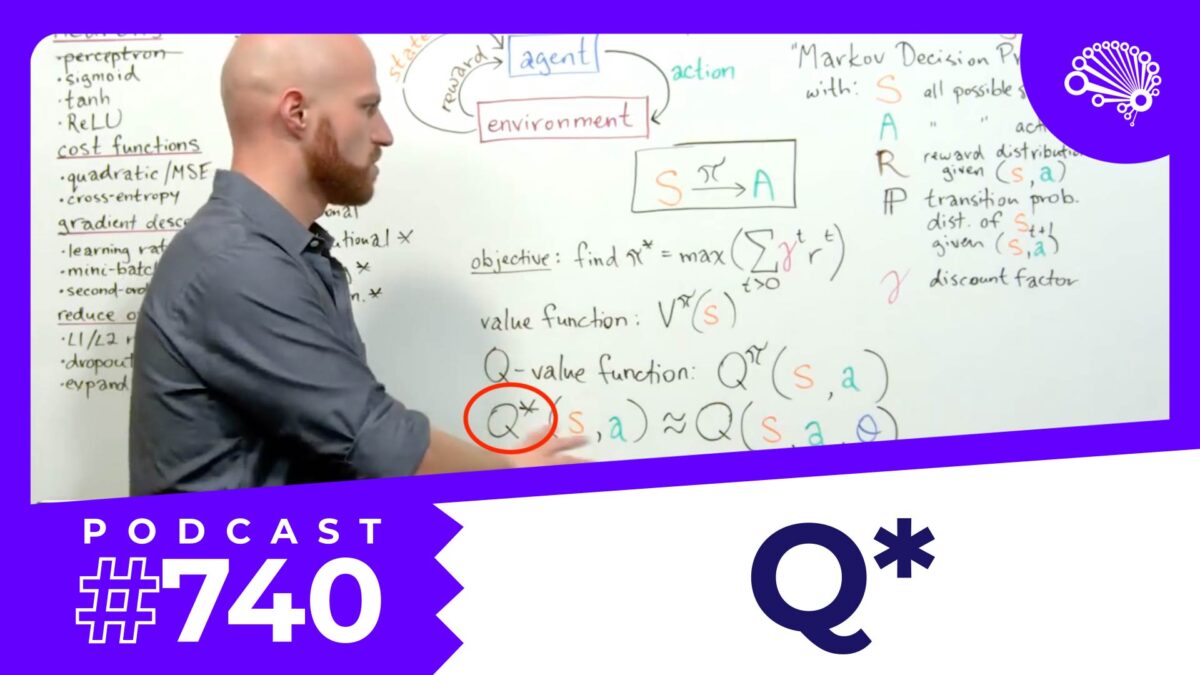

• The above research merges LLM reasoning abilities with search-tree methods, inspired by Google DeepMind’s AlphaGo algorithm and its ilk.

• The decades-old Q* concept is used for training models to simulate and evaluate prospective moves, a concept from reinforcement learning.

• Q*’s potential for automated self-play could lead to significant advancements in AGI, particularly by reducing reliance on (expensive) human-generated training data.

Implications:

• Q* could yield significant societal benefits (e.g., by solving mathematical proofs humans can’t or discovering new physics), albeit with potentially high inference costs.

• Q* raises concerns about security and the unresolved challenges in achieving AGI.

• While Q* isn’t the final leap towards AGI, it would represent a major milestone in general reasoning abilities.

The SuperDataScience podcast is available on all major podcasting platforms, YouTube, and at SuperDataScience.com.