One of our Nebula co-founders, Ed Donner, has carried out a fascinating project: He fine-tuned an open-source LLM on his text-message history, eerily effectively simulating not only himself but anyone who’s messaged him >1000 times.

The Strategy

1. Get the Messages:

Ed used a utility called iMazing to download all his SMS / iMessage and WhatsApp conversations. He filtered out group chats, people not in his contacts, and people he rarely message. This yielded 240,805 messages with 288 people.

2. Prepare the Dataset

Ed created his training and test datasets by grouping into chunks of messages with the same person, packing as many messages as possible into each chunk without exceeding 200 tokens. This resulted in 25,087 chunks of data, with each chunk containing a prompt and about 7-10 messages.

3. Hyper-parameter Search

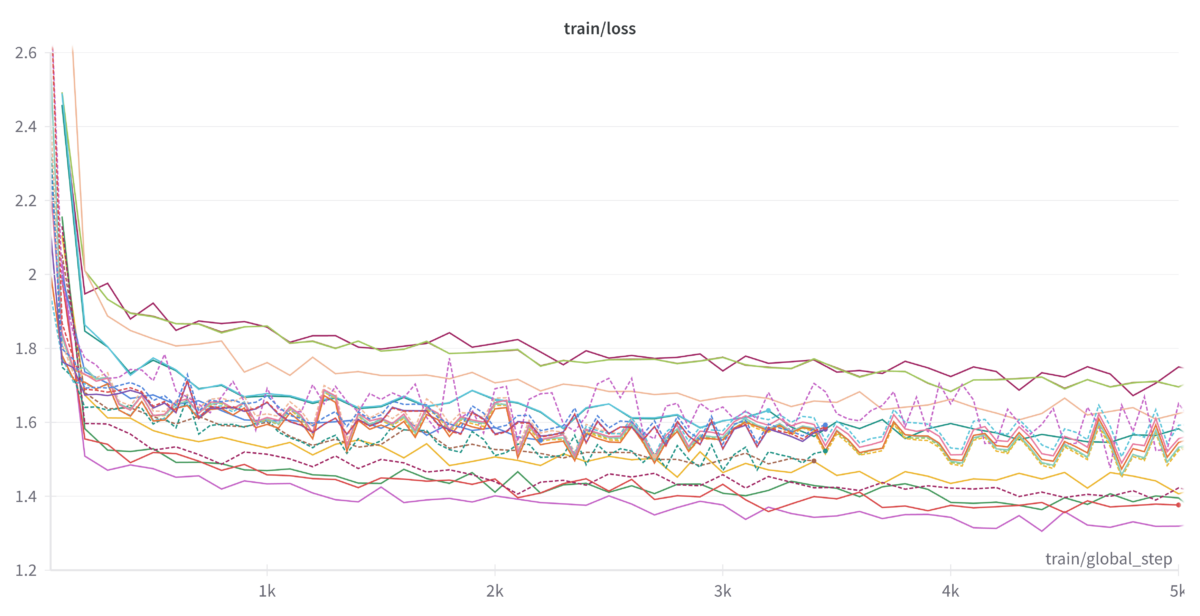

Ed started with the 7B parameter Llama 2 and fine-tuned using QLoRA on V100 VMs. He found conflicting advice all over the internets on QLoRA hyper-parameters and ended up doing a pretty extensive search. Later he upgraded to 13B parameters with a marked improvement in results.

4. Back to the Data

Then Ed returned to step 2. He worked on better ways to format the training data and on improvements to the prompt. He was thrown off at first because the training and eval loss actually increased, but the results were absolutely improving.

5. Generation

Finally, Ed used Hugging Face’s Text Generation to write conversations, either playing the role of him, or one of his contacts, or acting as both sides. He experimented with penalties for repetition, token suppression, beam search and more.

Initial Disappointment

Ed’s early attempts yielded similar results to his predecessors. The LLM would generate conversations that seemed to be surreal parodies of his life. His work colleagues would endlessly reschedule meetings earlier and later; him and his partner would relentlessly debate their lunch options. Efforts to encourage the model to be more diverse only resulted in increasingly unappetizing lunch alternatives.

But Then…

To Ed’s enormous delight, persistence paid off, largely after working on the prompt, the input data format and the generation approach. The LLM has become highly effective at imitating him. To his surprise, it can also convincingly act as many of Ed’s friends — really anyone with whom he’s exchanged at least 1,000 messages.

Ed was startled by how real the conversations seem, and how they reflect the nuances of his different relationships. The model isn’t always on point – it still sometimes gets stuck in loops or goes awry – but he would say more than half of the conversations would pass a Turing Test in that he wouldn’t be able to tell you if real or generated. Ed has actually been so surprised that he’s sometimes searched his text history in case the model is regurgitating real conversations (it’s not).

What’s Next?

Ed will be writing a series of posts on his approach so that others can experiment. Here is part 1, followed by part 2.

You can read more about Ed’s projects at edwarddonner.com.